Alex, Barney and I have been making a responsive sound environment. The idea is that when people walk into the installation space, their 'skeleton' is assigned an instrument from the currently playing music track.

As they move around, their movements control effects on that instrument only. When another person enters the space, they are assigned a different instrument, and each person controls their own instrument independently while contributing to the overall sound.
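The assignment logic described above can be sketched in a few lines of Python. This is only an illustration, not our actual Max/MSP patch: the `TrackAssigner` class, its method names and the rule of handing out the lowest free track first are all assumptions, and the limit of 8 tracks is taken from the patch description further down.

```python
class TrackAssigner:
    """Hypothetical helper: gives each tracked skeleton its own instrument track."""

    def __init__(self, num_tracks=8):
        # Track indices not currently controlled by anyone.
        self.free = list(range(num_tracks))
        # Maps skeleton id -> track index.
        self.assigned = {}

    def enter(self, skeleton_id):
        """Assign a track to a newly seen skeleton; None if every track is taken."""
        if skeleton_id in self.assigned:
            return self.assigned[skeleton_id]
        if not self.free:
            return None
        track = self.free.pop(0)  # assumption: lowest free track first
        self.assigned[skeleton_id] = track
        return track

    def leave(self, skeleton_id):
        """Release the track when a skeleton leaves, so a newcomer can reuse it."""
        track = self.assigned.pop(skeleton_id, None)
        if track is not None:
            self.free.append(track)
            self.free.sort()
        return track
```

With this sketch, two people entering get tracks 0 and 1; if the first person leaves, the next arrival picks up track 0 again.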

With multiple people controlling different aspects of the sound, it could easily get a bit chaotic. So we are keeping some tracks fixed, playing back a blanket of sound against which the other controlled instruments can springboard.

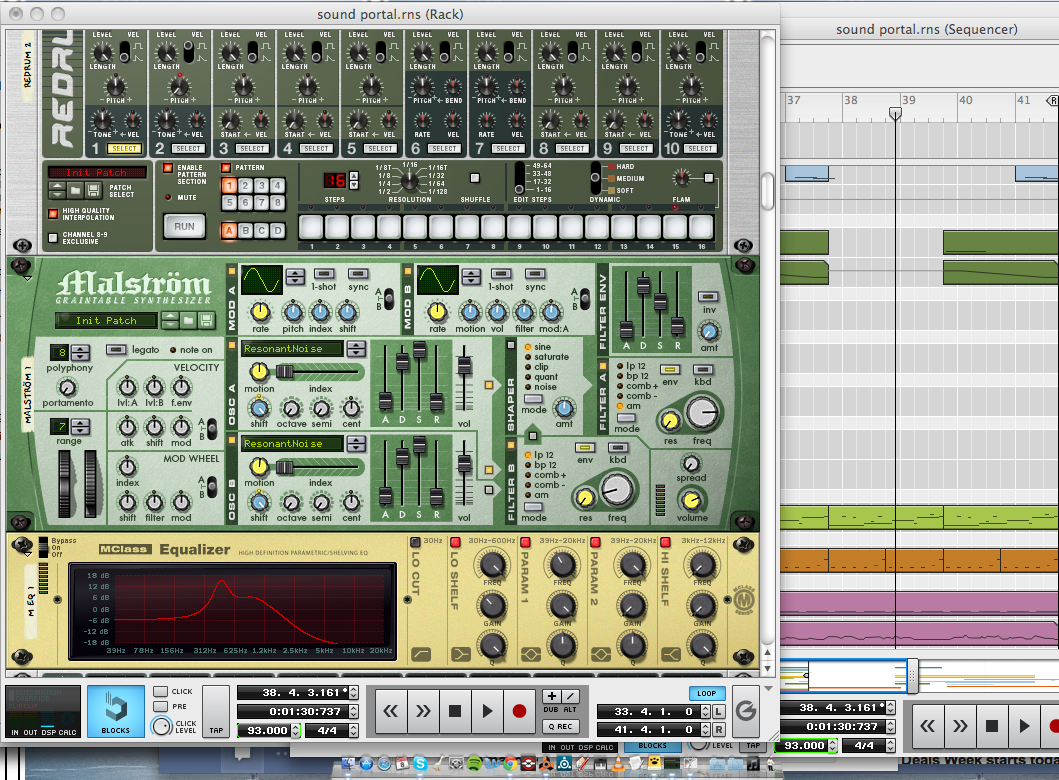

Barney has made some mellow electronic tracks, with looping melodies. The idea is that there will be some pace and a connection with the electronic music taking place in the rest of the venue. He has used Reason to compose the music and render out individual tracks for us to cut apart in realtime in Max/MSP.

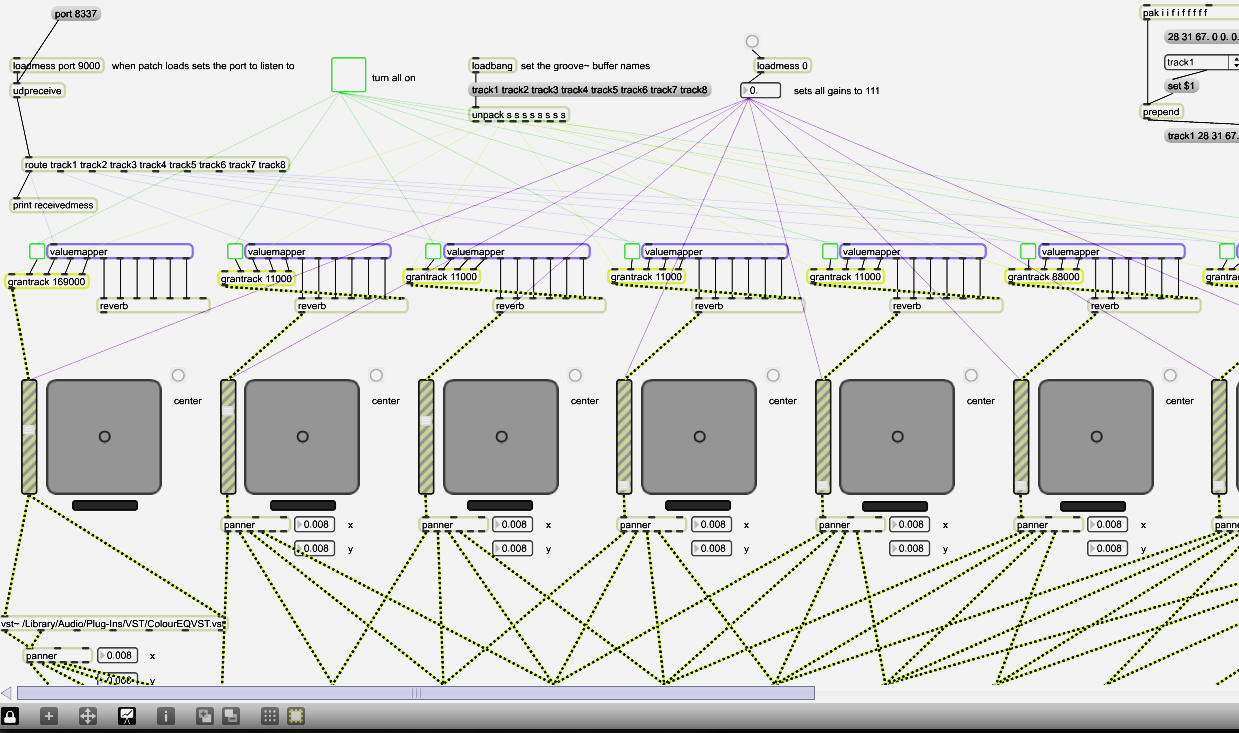

Alex and I have created a Max/MSP patch to handle interaction with the sound environment. It acts as the go-between, applying the interaction values received over the network from the graphics server to the music.

The Max/MSP patch operates on up to 8 rendered tracks (instruments) for each of Barney's compositions, and each track is controlled by one Kinect skeleton. Each track has up to 9 controllable attributes (reverb / granular synthesis / VSTs), so each Kinect skeleton controls up to 9 attributes on a single track of the currently playing composition.
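To make the skeleton-to-track routing concrete, here is a rough Python sketch of the idea. It is not how the patch is built: the `/track/N/attr/M` address scheme, the `route` function and the assumption that incoming values are normalised to the range 0 to 1 are all made up for illustration.

```python
NUM_TRACKS = 8       # up to 8 rendered tracks per composition
NUM_ATTRIBUTES = 9   # up to 9 controllable attributes per track


def route(skeleton_index, values):
    """Turn one skeleton's control values into (address, value) pairs
    aimed at that skeleton's track only.

    The address format is hypothetical, and values are assumed to be
    normalised, so anything outside 0..1 is clamped.
    """
    if not 0 <= skeleton_index < NUM_TRACKS:
        raise ValueError("no track available for this skeleton")
    messages = []
    # Ignore any values beyond the 9 attributes a track exposes.
    for attr_index, value in enumerate(values[:NUM_ATTRIBUTES]):
        clamped = min(1.0, max(0.0, float(value)))
        address = f"/track/{skeleton_index}/attr/{attr_index}"
        messages.append((address, clamped))
    return messages
```

Because the skeleton index is baked into every address, one person's movements can never touch another person's track.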

One of the key parts of building this environment has been modularising Alex's original granular synth and reverb patches, so that they can be re-used with different settings and against different buffers (above).

We also created a 4-way panning system based on VBAP to allow us to pan sounds around the four speakers in our installation environment. Each rendered track can be panned individually. We'll be experimenting later today to figure out whether this panning should also be controlled by OSC data or by some built-in pattern related to the sounds.
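For anyone curious how VBAP-style panning across four speakers works, here is a minimal two-dimensional sketch in Python. It is not our Max/MSP implementation: the speaker angles (a square at 45, 135, 225 and 315 degrees around the listener) and the `vbap_gains` function are assumptions for illustration. The source direction is expressed as a weighted sum of the two adjacent speakers that enclose it, and the weights are then scaled to constant power.

```python
import math

# Assumed layout: four speakers in a square around the listener.
SPEAKER_ANGLES_DEG = [45.0, 135.0, 225.0, 315.0]


def _unit(angle_deg):
    """Unit vector pointing at the given angle."""
    a = math.radians(angle_deg)
    return (math.cos(a), math.sin(a))


def vbap_gains(source_angle_deg):
    """Return one gain per speaker for a source at the given angle."""
    px, py = _unit(source_angle_deg)
    n = len(SPEAKER_ANGLES_DEG)
    gains = [0.0] * n
    for i in range(n):
        j = (i + 1) % n  # neighbouring speaker, wrapping around
        ax, ay = _unit(SPEAKER_ANGLES_DEG[i])
        bx, by = _unit(SPEAKER_ANGLES_DEG[j])
        # Solve p = gi * a + gj * b with Cramer's rule.
        det = ax * by - ay * bx
        gi = (px * by - py * bx) / det
        gj = (ax * py - ay * px) / det
        # The source lies between this pair when both gains are non-negative.
        if gi >= -1e-9 and gj >= -1e-9:
            gi, gj = max(gi, 0.0), max(gj, 0.0)
            norm = math.hypot(gi, gj)  # scale so gi^2 + gj^2 == 1
            gains[i], gains[j] = gi / norm, gj / norm
            return gains
    raise ValueError("source angle not enclosed by any speaker pair")
```

A source at 90 degrees sits halfway between the first two speakers and gets equal gain in both, while a source at exactly 45 degrees plays from the first speaker alone. Sweeping the angle over time would give the kind of built-in panning pattern mentioned above.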