The Kinect V2 depth sensor is now widely available, but bound to Windows. I've written an addon which simplifies getting Kinect V2 skeletal data onto a Mac, so you can manipulate it in openFrameworks.

You'll need two computers, a Windows PC and a Mac, with a network connection between them. You won't have to write any code on the Windows side; you just run a utility.

Then just launch an openFrameworks project on your Mac, include the addon, and you will have access to a simple template for rendering the skeletal data in realtime.

A bit of background

The problem this addon tries to solve is that the Kinect V2 needs to run on Windows 8.1 with a USB 3.0 port. If you develop on a Mac, you have to switch operating systems; there's no way around it.

But it's not even that simple. Say you switch to Windows and want to use openFrameworks. You then have to learn Visual Studio, which is not the most appealing prospect for much of the openFrameworks community.

Even if you do that, the solutions available for getting sensor data into openFrameworks are patchy and immature at best. So you might be better off learning WPF and C#, or DirectX and C++, so you can work with the well-supported official SDK APIs (managed for C#, native for C++).

That's a lot to learn. And it ties you even further into Windows-only code.

A simpler solution

If you only need skeletal and gesture data, there's a simpler way. The addon ofxKinectV2-OSC helps you get realtime skeletal data from the V2 sensor into openFrameworks on your Mac.

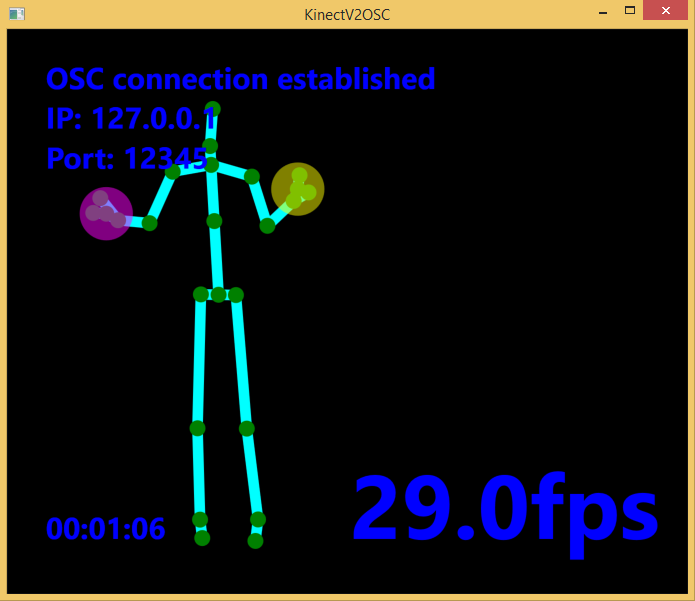

Here's how it works. You have a Windows machine and a Mac running side by side on the same network. On the Windows machine, you download and run a simple utility (written in WPF), which broadcasts all the skeletal information over the network using OSC.

It sends all of the data every frame, so there is nothing to configure and no code to edit.
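For readers new to OSC, here is a rough sketch of what a single message on the wire looks like under the OSC 1.0 encoding rules: a null-padded address pattern, a type-tag string, then big-endian 32-bit floats. The helper names, the address `/bodies/0/joints/HandLeft`, and the choice of sending a joint as three floats are all hypothetical; they illustrate the encoding, not the Windows utility's actual message scheme. In practice an OSC library does this for you.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC-string: the characters, a terminating null, then extra nulls
// so the total length is a multiple of 4 bytes.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    assert(s.find('\0') == std::string::npos);  // OSC-strings cannot contain nulls
    out.insert(out.end(), s.begin(), s.end());
    std::size_t padded = (s.size() / 4 + 1) * 4;  // always at least one null
    out.insert(out.end(), padded - s.size(), std::uint8_t{0});
}

// Append a 32-bit IEEE 754 float in big-endian (network) byte order.
void appendOscFloat(std::vector<std::uint8_t>& out, float value) {
    static_assert(sizeof(float) == 4, "OSC floats are 32-bit");
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
}

// Encode one OSC message carrying a joint position as three float arguments.
// Hypothetical: the address scheme (e.g. "/bodies/0/joints/HandLeft") is
// illustrative only, not the utility's real one.
std::vector<std::uint8_t> encodeJointMessage(const std::string& address,
                                             float x, float y, float z) {
    std::vector<std::uint8_t> packet;
    appendOscString(packet, address);
    appendOscString(packet, ",fff");  // type tags: three float32 arguments
    appendOscFloat(packet, x);
    appendOscFloat(packet, y);
    appendOscFloat(packet, z);
    return packet;
}
```

For example, `encodeJointMessage("/bodies/0/joints/HandLeft", 0.1f, 0.2f, 1.5f)` yields a 48-byte packet: 28 bytes of padded address, 8 bytes of type tags and 12 bytes of floats. Sending every joint like this each frame is cheap, which is why a "send everything" design needs no configuration.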

Over on the Mac, clone ofxKinectV2-OSC into your addons directory. The addon reads in all the OSC data and uses it to populate an object model, which you can then query however you like:

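```cpp
#include "ofMain.h"
#include "ofxKinectV2OSC.h"  // assumed header name, matching the class name

// Example value: must match the port the Windows utility broadcasts to
const int PORT_NUMBER = 12345;

ofxKinectV2OSC kinect;

void setup() {
    // Start listening for the OSC messages sent by the Windows utility
    kinect.setup(PORT_NUMBER);
}

// This draws the left hand of each skeleton to the screen
void draw() {
    vector<Skeleton>* skeletons = kinect.getSkeletons();

    for (size_t i = 0; i < skeletons->size(); i++) {
        Skeleton* skeleton = &skeletons->at(i);
        Joint handLeft = skeleton->getHandLeft();

        // ofCircle was renamed ofDrawCircle in openFrameworks 0.9
        ofCircle(handLeft.getPoint(), 25);
    }
}
```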

That's it. You can get the 3D position of every joint, the tracking state of each joint (Tracked, NotTracked or Inferred), and the open/closed state of each hand.
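The kind of data described above can be pictured with a few plain C++ types. This sketch is hypothetical: `TrackingState`, `HandState`, `Vec3`, `JointData`, `Body` and `trackedCentroid` are illustrative names, not the addon's actual classes. It shows one typical query, averaging only the joints reported as Tracked so that Inferred guesses don't pull the result around.

```cpp
#include <map>
#include <optional>
#include <string>

// Hypothetical stand-ins for the object model; the addon's real class and
// method names may differ.
enum class TrackingState { Tracked, NotTracked, Inferred };
enum class HandState { Open, Closed, Unknown };

struct Vec3 { float x, y, z; };

struct JointData {
    Vec3 position;
    TrackingState state;
};

struct Body {
    std::map<std::string, JointData> joints;  // keyed by joint name, e.g. "HandLeft"
    HandState leftHand = HandState::Unknown;
    HandState rightHand = HandState::Unknown;
};

// Average the positions of joints reported as fully Tracked, skipping
// Inferred and NotTracked ones. Returns nullopt if no joint is tracked.
std::optional<Vec3> trackedCentroid(const Body& body) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    int count = 0;
    for (const auto& entry : body.joints) {
        const JointData& joint = entry.second;
        if (joint.state != TrackingState::Tracked) continue;
        sum.x += joint.position.x;
        sum.y += joint.position.y;
        sum.z += joint.position.z;
        ++count;
    }
    if (count == 0) return std::nullopt;
    return Vec3{sum.x / count, sum.y / count, sum.z / count};
}
```

Whether to ignore Inferred joints is a judgment call: it gives steadier values, at the cost of returning nothing when a body is only partly visible.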

Using the included example code

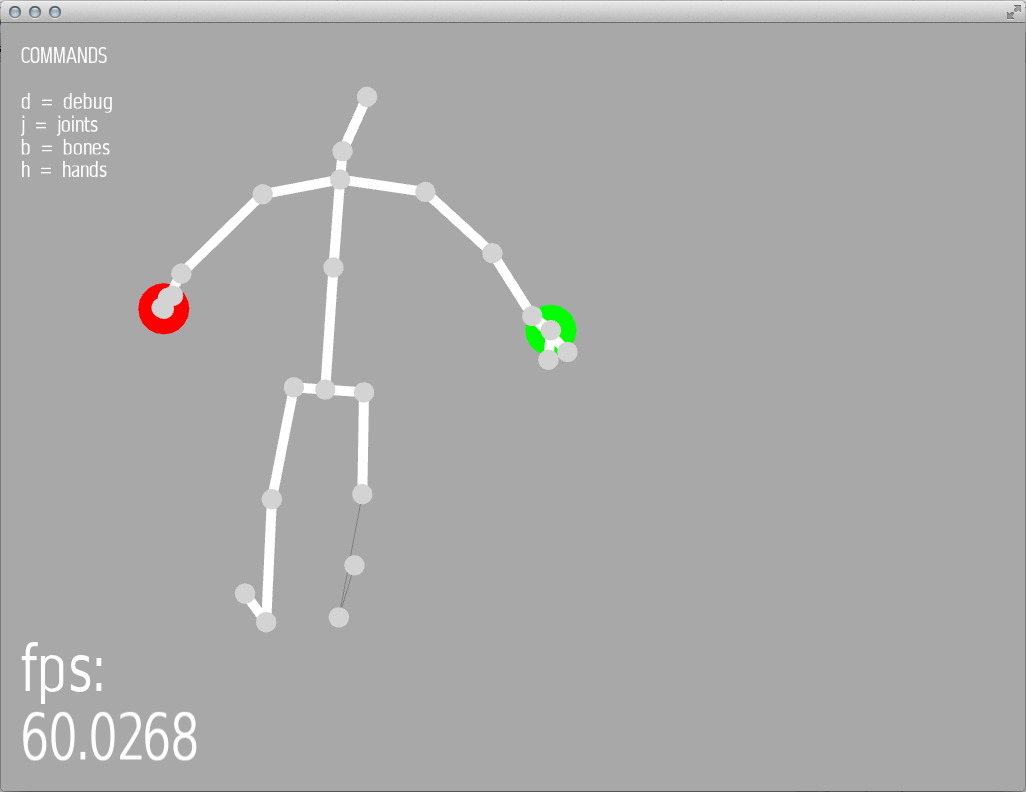

The addon comes with example code, and a BodyRenderer class you can edit to draw the skeleton how you like:

This screengrab shows the skeleton being rendered in openFrameworks, with confident bones in thick white and less-confident bones in thin gray. One hand is open (green), and the other is closed (red).
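The styling in the screengrab can be expressed as a small pure function. This is a sketch of one plausible rule, not the BodyRenderer's actual code: a bone is thick and white only when both of its joints are Tracked, thin and gray when either end is Inferred, and hidden when either end is NotTracked. The type names and the 8 px / 2 px line widths are hypothetical.

```cpp
#include <cstdint>
#include <optional>

// Hypothetical states mirroring the values described in the post.
enum class TrackingState { Tracked, NotTracked, Inferred };
enum class HandState { Open, Closed, Unknown };

struct Rgb { std::uint8_t r, g, b; };

struct BoneStyle {
    bool visible;
    float lineWidth;  // in pixels; the values below are arbitrary
    Rgb color;
};

// Style for the bone joining two joints, based on how confidently each is tracked.
BoneStyle boneStyle(TrackingState a, TrackingState b) {
    if (a == TrackingState::NotTracked || b == TrackingState::NotTracked) {
        return {false, 0.0f, {0, 0, 0}};       // an end is missing: don't draw
    }
    if (a == TrackingState::Tracked && b == TrackingState::Tracked) {
        return {true, 8.0f, {255, 255, 255}};  // both ends tracked: thick white
    }
    return {true, 2.0f, {128, 128, 128}};      // an end is inferred: thin gray
}

// Open hands are highlighted green, closed hands red, anything else not at all.
std::optional<Rgb> handColor(HandState state) {
    switch (state) {
        case HandState::Open:   return Rgb{0, 255, 0};
        case HandState::Closed: return Rgb{255, 0, 0};
        default:                return std::nullopt;
    }
}
```

Keeping the decision separate from the drawing calls means it can be tested without opening a window; in an openFrameworks draw loop you would pass the result to `ofSetLineWidth` and `ofSetColor` before drawing each bone.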

Going forward

The Kinect V2 SDK supports a huge number of gestures, plus vendor addons, and support for these can be added going forward. I'm open to collaborations and pull requests to develop the addon and utility further.

For those of you in New York, we will be experimenting with this further over in the lab on Wednesdays. Join the meetup if you want to come and try out the gear for yourself.